Evaluation Instruction

The Format of Submission Result

The estimated 6-DoF camera poses (the transform from the camera coordinate frame to the world coordinate frame) are required to evaluate performance. Because estimation involves some randomness, each sequence must be run 5 times, producing 5 pose files and 5 running time files. We will select the median of the five results for evaluation. Each pose file uses the following format:

timestamp[i] p_x p_y p_z q_x q_y q_z q_w

where (p_x p_y p_z) is the camera position,

and the unit quaternion (q_x, q_y, q_z, q_w) is the camera orientation.

You should output the real-time pose after each frame is processed (not the poses after final global optimization), and poses should be output at the same frame rate as the input camera images; otherwise, the completeness evaluation will be affected.
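As a minimal sketch of the pose line format described above, the following Python helper formats one line per frame. The function name `format_pose_line`, the nine-decimal precision, and the choice to renormalize the quaternion before writing are illustrative assumptions, not requirements stated by the organizers.

```python
def format_pose_line(timestamp, position, quaternion_xyzw):
    """Return one pose-file line: timestamp p_x p_y p_z q_x q_y q_z q_w."""
    px, py, pz = position
    qx, qy, qz, qw = quaternion_xyzw
    # Renormalize so the orientation is written as a unit quaternion.
    norm = (qx * qx + qy * qy + qz * qz + qw * qw) ** 0.5
    if norm == 0.0:
        raise ValueError("quaternion must be non-zero")
    qx, qy, qz, qw = (c / norm for c in (qx, qy, qz, qw))
    values = [timestamp, px, py, pz, qx, qy, qz, qw]
    # Nine decimal places is an assumed precision, not a stated requirement.
    return " ".join(f"{v:.9f}" for v in values)


# One line should be emitted for every input frame, right after it is processed.
line = format_pose_line(1.5, (0.1, 0.2, 0.3), (0.0, 0.0, 0.0, 2.0))
print(line)
```

Note the quaternion component order: the scalar part `q_w` comes last.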

The format for each running time file is as follows:

timestamp[i] t_pose

where t_pose denotes the system time (or the cumulative running time), in seconds with at least three decimal places, at which the pose is estimated. It must be recorded for every frame, even for black frames in D6.
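One possible way to record the cumulative running time is sketched below in Python. The names `run_and_log_times` and `process_frame` are hypothetical, and `process_frame` stands in for whatever per-frame tracking call your system exposes; the sketch uses the cumulative running time option rather than the system time.

```python
import time


def run_and_log_times(timestamps, process_frame):
    """Process frames in order and return 'timestamp t_pose' lines."""
    lines = []
    start = time.perf_counter()
    for ts in timestamps:
        process_frame(ts)  # hypothetical per-frame tracking call
        # Cumulative running time in seconds since the first frame started.
        t_pose = time.perf_counter() - start
        # Six decimals satisfies the "at least three decimal places" rule.
        lines.append(f"{ts:.9f} {t_pose:.6f}")
    return lines


# Dummy run with a no-op tracker, including frames that yield no useful image.
time_lines = run_and_log_times([0.0, 0.033, 0.066], lambda ts: None)
print("\n".join(time_lines))
```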

Submission

Please submit a zip file containing all the pose and running time files. The zip file should be structured as follows:

YourSLAMName/sequence_name/Round-pose.txt

YourSLAMName/sequence_name/Round-time.txt

e.g.

MY-SLAM/C0_test/0-pose.txt

MY-SLAM/C0_test/0-time.txt

You can click here to download the example.
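The directory layout above could be produced with Python's standard `zipfile` module, as in the sketch below. The function `build_submission` and the shape of its `results` argument are hypothetical, and numbering the rounds from 0 is an assumption based on the `0-pose.txt` example.

```python
import io
import zipfile


def build_submission(slam_name, results):
    """Pack pose and time files into an in-memory zip archive.

    results maps sequence_name -> list of (pose_text, time_text), one per round.
    """
    buffer = io.BytesIO()
    with zipfile.ZipFile(buffer, "w", zipfile.ZIP_DEFLATED) as archive:
        for sequence_name, rounds in results.items():
            # Rounds are numbered from 0 (assumed from the example above).
            for round_index, (pose_text, time_text) in enumerate(rounds):
                prefix = f"{slam_name}/{sequence_name}/{round_index}"
                archive.writestr(f"{prefix}-pose.txt", pose_text)
                archive.writestr(f"{prefix}-time.txt", time_text)
    return buffer.getvalue()


# Five rounds of (empty) placeholder files for one sequence.
data = build_submission("MY-SLAM", {"C0_test": [("", "")] * 5})
names = zipfile.ZipFile(io.BytesIO(data)).namelist()
print(names[:2])
```

Writing `data` to a file such as `MY-SLAM.zip` then gives an archive with the expected structure.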

Evaluation

We evaluate the overall performance of a SLAM system in terms of tracking accuracy, initialization quality, tracking robustness, relocalization time, and computational efficiency. The criteria are as follows:

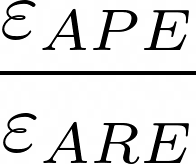

for absolute positional / rotational error

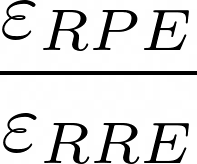

for relative positional / rotational error

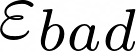

for the ratio of bad poses (100% - completeness)

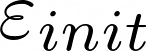

for the initialization quality

for the tracking robustness

for the relocalization time

For more details, please refer to the paper [1].

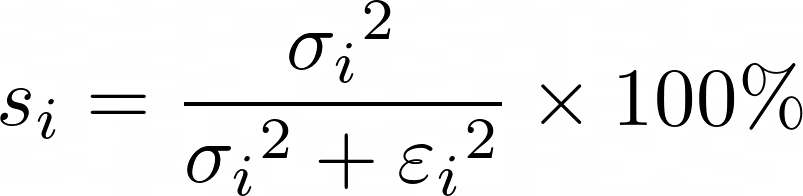

We convert each criteria error  into a normalized score by:

into a normalized score by:

where  is the variance controlling the normalization function shape.

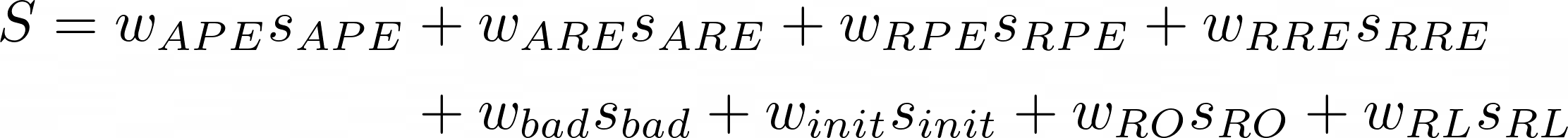

The complete score is a weighted sum of all the individual scores as:

is the variance controlling the normalization function shape.

The complete score is a weighted sum of all the individual scores as:

where weight  and

variance

and

variance  for each criteria are listed below:

for each criteria are listed below:

| | | | | | | | | |
|---|---|---|---|---|---|---|---|---|
| Weight | 1.0 | 1.0 | 0.5 | 0.5 | 1.0 | 1.0 | 1.0 | 1.0 |
| Variance | 55.83 | 2.48 | 2.92 | 0.17 | 2.38 | 1.85 | 0.95 | 1.42 |

The variances listed above are obtained by computing the median of our previous evaluation results, which contain the results of 4 VI-SLAM systems (MSCKF, OKVIS, VINS-Mono, SenseSLAM) evaluated on our previously released dataset.

You can evaluate your SLAM system with our training dataset using the evaluation tool.
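As a rough illustration of the weighted sum described above, the Python sketch below combines eight per-criterion scores using the weights from the table, in table order. The function name `complete_score` is hypothetical, the per-criterion scores are taken as given (the normalization function is not reproduced here), and whether the official tool rescales the sum is not stated in this section, so the sketch returns the plain weighted sum.

```python
# Weights copied from the table above, in the table's column order.
WEIGHTS = [1.0, 1.0, 0.5, 0.5, 1.0, 1.0, 1.0, 1.0]


def complete_score(scores, weights=WEIGHTS):
    """Return the plain weighted sum of already-normalized criterion scores."""
    if len(scores) != len(weights):
        raise ValueError("expected one score per criterion")
    return sum(w * s for w, s in zip(weights, scores))


# Hypothetical input: a system that scores 1.0 on every criterion.
total = complete_score([1.0] * 8)
print(total)  # 7.0, the sum of the weights
```

For authoritative numbers, use the organizers' evaluation tool rather than this sketch.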

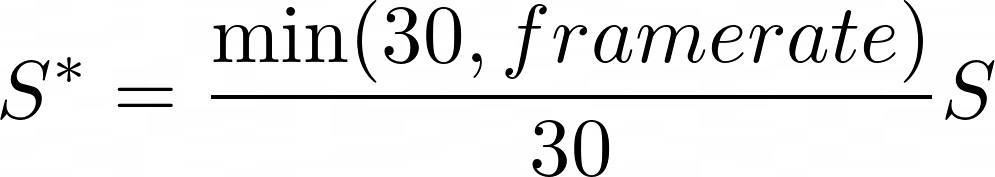

In the final round competition, we will test all systems on benchmarking PCs with the same hardware configuration. The running time will be taken into account for computing the final score according to the following equation:

where  denotes the average framerate of the system.

It should be noted that not all sequences are evaluated for all the critera.

The corresponding critera for the sequences are listed below:

denotes the average framerate of the system.

It should be noted that not all sequences are evaluated for all the critera.

The corresponding critera for the sequences are listed below:

| Sequences | Corresponding Criteria |

|---|---|

| C0 - C11, D8 - D10 | APE, RPE, ARE, RRE, Badness, Initialization Quality |

| D0 - D4 | Tracking Robustness |

| D5 - D7 | Relocalization Time |
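The table above can be expressed as a small lookup, sketched here in Python. The function name `criteria_for` is hypothetical, and the sketch assumes sequence names begin with a `C` or `D` followed by an index, as in the `C0_test` example.

```python
import re

TRAJECTORY_CRITERIA = [
    "APE", "RPE", "ARE", "RRE", "Badness", "Initialization Quality",
]


def criteria_for(sequence_name):
    """Return the criteria evaluated for a sequence such as 'C0_test' or 'D6'."""
    match = re.match(r"([CD])(\d+)", sequence_name)
    if match is None:
        raise ValueError(f"unrecognized sequence name: {sequence_name}")
    group, index = match.group(1), int(match.group(2))
    if group == "C" and 0 <= index <= 11:
        return list(TRAJECTORY_CRITERIA)
    if group == "D":
        if 0 <= index <= 4:
            return ["Tracking Robustness"]
        if 5 <= index <= 7:
            return ["Relocalization Time"]
        if 8 <= index <= 10:
            return list(TRAJECTORY_CRITERIA)
    raise ValueError(f"sequence not listed in the table: {sequence_name}")


print(criteria_for("C0_test"))
print(criteria_for("D6"))
```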

Acknowledgement

[1] Jinyu Li, Bangbang Yang, Danpeng Chen, Nan Wang, Guofeng Zhang, Hujun Bao. Survey and evaluation of monocular visual-inertial SLAM algorithms for augmented reality. Journal of Virtual Reality & Intelligent Hardware, 2019, 1(4): 386-410. DOI:10.3724/SP.J.2096-5796.2018.0011.